Physical AI: from vertical services to a self-replicating robot economy

Exploring physical AI's inflections, bottlenecks, modular technology stack and venture opportunities.

This post explores Physical AI: the fusion of artificial intelligence with robotic embodiment. After a brief history of the theme, we discuss its underlying inflections, bottlenecks, the rise of the physical AI stack, and potential opportunities over the short and long term.

As imagined by Midjourney: R2-D2 and a small droid floating in space, a technical drawing. Mid century sci fi art inspired.

A brief history

Physical AI refers to artificial intelligence embodied in physical agents (robots, smart machines) that can sense and act in the physical world. Their intelligence arises from continual interaction with their environment via sensors and actuators, in addition to data and algorithms in the cloud.

The robotics revolution has long been in the making. Since the 1960s, we’ve seen waves of interest in robotics: from Unimate’s industrial arms to Rodney Brooks’ embodied cognition principles to DARPA’s autonomous vehicle challenges. Each era pushed boundaries—but also exposed limitations due to hardware cost, brittle AI, or unreliable sensing.

That is changing now. The convergence of cheap, powerful edge compute, high-fidelity simulation, algorithmic breakthroughs (think foundation models, general purpose robot intelligence), and macroeconomic shifts (like aging populations, reshoring, labor shortages) enables the next wave of physical AI. We expect this wave to unfold over the next 5-10 years. Applications ranging from autonomous drone swarms (from bee-sized drones to tank-sized UGVs) for transport, warfare and surveillance all the way to industrial robots (micro factories, additive manufacturing, autonomous science) are underway today. The current stage of intelligent robotics is comparable to the 1950s of the information age, when machines could only run one specialised algorithm at a time, like decrypting messages or steering missiles, and before general-purpose microprocessors became widespread in the 1970s and 1980s. While it took humanity decades to unlock general purpose compute, we have reason to expect general purpose robotics to arrive faster thanks to compounding flywheel effects across the stack.

“The material economy could autonomously make and assemble the parts required for more key parts of the material economy — extracting materials, making parts, assembling robots, building entire new factories and power plants, and producing more chips to train AI to control the robots, too. The result is an industrial base which grows itself, and which can keep growing over many doublings without being bottlenecked by human labour, visibly transforming the world in the process.”

Will MacAskill & Fin Moorhouse in Preparing for the Intelligence Explosion, March 2025

How much faster we will get from specialised services to a general purpose robotic economy is very hard to tell. In its AI 2027 Report, the AI Futures Project, a non-profit think tank forecasting the future of AI, expects economic growth to accelerate by about 1.5 orders of magnitude (roughly 30x) within a few years after superintelligence, based on historical precedents and trends. They also recognise that:

“Obviously, all of this is hard to predict. It’s like asking the inventors of the steam engine to guess how long it takes for a modern car factory to produce its own weight in cars, and also to guess how long it would take until such a factory first exists.”

With that off our chest, let’s explore what might be feasible today, what the underlying inflections and bottlenecks are before discussing some opportunities.

Inflections

A question that we ask ourselves at Inflection every day is “What are the profound shifts in technology, science and markets that are enabling novel behaviours at a vast scale?”

Technology

The hardware cost curves and AI capabilities have finally aligned. Critical components like LiDAR and edge computing have plummeted in price: LiDAR sensors that cost $75,000 in 2015 now cost under $7,500 (a 90% drop), with some automotive LiDARs targeting <$500. Onboard processing units (like NVIDIA Jetson) declined from $3,000 to $399. Ubiquitous connectivity (5G) and IoT infrastructure further enable distributed robots.
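To make the size of these price drops comparable, here is a minimal sketch that computes the percentage decline for each figure quoted above. The helper name `percent_drop` is our own, and the $500 LiDAR figure is a target rather than a current price.

```python
def percent_drop(old_price: float, new_price: float) -> float:
    """Return the price decline from old_price to new_price in percent."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) / old_price * 100.0


# Figures quoted in the text above (USD).
print(f"LiDAR 2015 -> today:  {percent_drop(75_000, 7_500):.1f}%")  # 90.0%
print(f"LiDAR 2015 -> target: {percent_drop(75_000, 500):.1f}%")    # 99.3%
print(f"Onboard compute:      {percent_drop(3_000, 399):.1f}%")     # 86.7%
```

In other words, onboard compute has fallen by a similar order of magnitude as LiDAR, and the automotive LiDAR target would imply a further drop of more than 90% from today's level.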

We expect developments in software to accelerate developments in hardware, which is why the hardware capability curve has started to steepen. One example is generative design, where AI models optimize mechanical parts based on goals like strength, weight, or thermal resistance. Foundation models (like GPT-4 or Claude) are beginning to assist in hardware workflows (writing firmware, generating CAD models, or summarizing engineering specs), enabling a shorter, cheaper and more iterative hardware loop. Katie Vasquez has written a great summary piece that goes a bit deeper: AI and Physics-Based Modeling: A Force Multiplier for the Future of Hardware.

Over-simplified schematic illustration of how increased software capabilities (generative design tools, simulations, world models etc.) enable faster and cheaper hardware improvements, thereby steepening the “hardware capability” curve over time.

Meanwhile, algorithms have leapt forward from basic perception to deep learning and large “world models” that give machines a form of common sense about physical environments, all the way to neuro-inspired approaches to learning. The software toolchain for developing and testing hardware has matured, with high-fidelity simulators and better developer tools lowering the barrier to entry. We will dive into this more under “Rise of the physical AI stack” below.

Macro

After decades of outsourcing productivity to the East, the West has a productivity issue and, with that, an (industrial, military and compute) sovereignty issue. Productivity levels are the input for industrial capacity. Industrial capacity is the input for military capacity.

Source: World Bank

Due to declining populations across western countries (and most of the globe actually), additional productivity can only be rooted in technology growth. The “New Labor Economy” (manufacturing, logistics, healthcare, agriculture, construction, etc.) represents a $100+ trillion market (the “atoms” economy), vastly dwarfing the ~$11T digital economy of software and internet services.

China leads in industrial robot installations and controls 47% of the global supply of industrial robots (a share that is decreasing for now). The US leads in physical AI models and has woken up to the risk of missing the boat. Europe needs to catch up on all fronts but has an exceptionally strong industrial base with deep process knowledge, especially in Germany.

https://ifr.org/img/worldrobotics/Press_Conference_2024.pdf

“The impact of this in robotics will be exponential compared to their last strategic industry captures. These will be robotics systems manufacturing more robotics systems, and with each unit produced the cost will be driven down continuously and the quality will improve, only strengthening their production flywheel.”

https://semianalysis.com/2025/03/11/america-is-missing-the-new-labor-economy-robotics-part-1/

Unblocking the future

Despite the strong tailwinds mentioned above, there are several non-technical bottlenecks robotics innovators are facing. Overcoming them will be cumbersome and time consuming, especially the ones outside the entrepreneur’s sphere of influence.

Cultural resistance: Robots often face resistance due to job displacement fears and cultural discomfort. Labor unions, policymakers, and conservative industries (like healthcare or education) often push back on automation. Even military adoption of autonomous systems faces deep ethical scrutiny.

Regulation: liability is often unclear - is it the manufacturer, the operator or the software provider? The complexities faced by Tesla and Wayve around insurance and liabilities are similar in other verticals, depending on exposure and risk profiles.

Standards & Interoperability: There is no equivalent of USB or TCP/IP for robotics, as the industry relies on proprietary SDKs, control stacks or custom comms protocols. Components are rarely interoperable, and software needs to be re-written from scratch for each hardware platform.

Distribution: unlike for mobile apps or books there is no clear distribution layer for robotics in place. Industrial sales cycles are long, trust-intensive, and vertical-specific.

Deployment: rollouts are complex and require specialised, scarce knowledge around simulations and edge AI operations.

There might be many more bottlenecks to be overcome. At the end of the day we believe that the inflections and tailwinds will outweigh the resistance in terms of culture and consequently regulation. All other challenges can and will be solved by entrepreneurs, not bureaucrats.

Rise of the physical AI stack

Physical AI Stack – an overview from the foundational hardware at the bottom to high-level applications at the top:

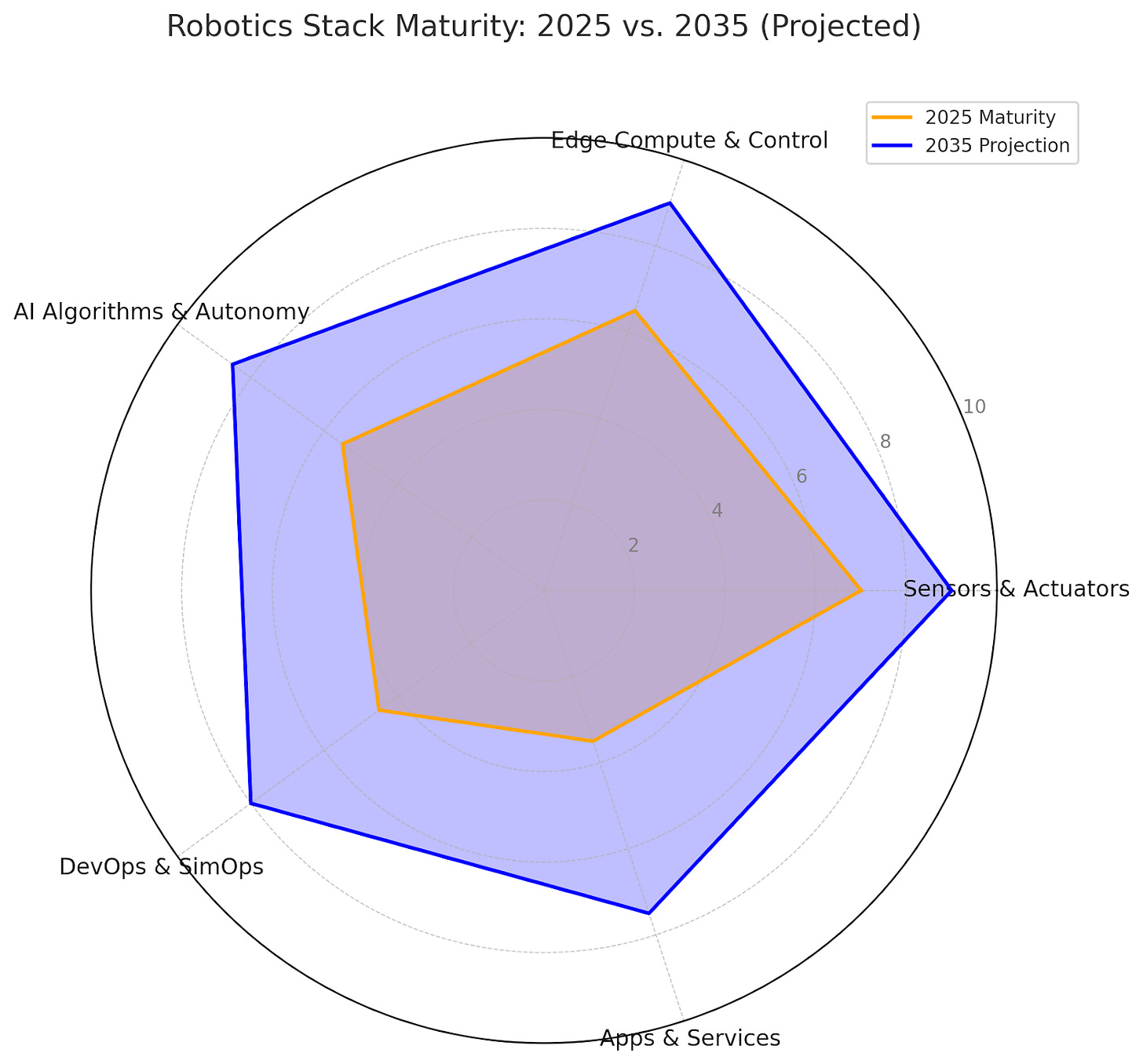

This is what technology maturity looks like today (2025) and what it might look like about a decade from now (2035). Finding proxies for technology maturity and tipping points is hard. The Technology Readiness Level (TRL) scale needs a refresh, as pointed out very coherently by Nathan Mintz. We tried to synthesize maturity levels from proxy data on manufacturing readiness, adoption levels, integration maturity, commercialisation and open standards adoption. OpenAI’s Deep Research model helped, alongside feedback from various researchers and practitioners. To be enjoyed with a huge pinch of salt.

Here are the definitions:

Concept Only: Idea under academic or speculative discussion.

Early Research: Limited experiments, no real-world implementation.

Prototype in Lab: Exists in testbeds or research demos.

Lab Validated: Components tested under realistic, but controlled conditions.

Pilot-Ready: Used in small-scale field trials, not broadly reliable.

Deployed in Niche Use-Cases: Market exists, but only in verticalized settings.

Interoperable + Scalable: Standardized and modular; integrates with existing infrastructure.

Ecosystem-Integrated: Supported by dev tools, APIs, and external vendors.

Industry-Standard: Robust, widely deployed, with supply chain maturity.

Invisible Infrastructure: Ubiquitous, trusted, plug-and-play (e.g. like Wi-Fi, USB).

Sensors & Actuators (2025: 7 → 2035: 9)

Mature industrial base: depth cameras (Intel RealSense), LiDAR (Ouster, Hesai), force-torque sensors, and servo motors are widespread and commoditized. Bottlenecks remain in multi-modal fusion (sensor latency and integration) and in cost/power constraints for mobile platforms. Actuators are robust in industrial robots (ABB, Fanuc), but underpowered or inefficient in other areas.

Edge Compute & Control (2025: 6 → 2035: 9)

Edge AI hardware (e.g., Jetson Orin, Google Coral) is powerful and affordable, but thermal limits, power draw, and ruggedization (hardening systems to operate reliably in harsh environments, e.g. extreme temperature, vibration, dust etc.) are still issues. Real-time control stacks (e.g., ROS2 + DDS) are improving but remain brittle at scale. Hard real-time industrial controllers are mature, but they lack flexibility for general-purpose autonomy. Balancing compute loads between onboard and cloud remains a challenge with regard to latency and reliability. Analogies can be drawn from Daniel Kahneman’s Thinking, Fast and Slow, where different systems are responsible for different types of decision making, just like in human brains and nervous systems. NVIDIA has been pioneering the three-computer framework spanning AI training, simulation and onboard resources, but many questions remain open.

AI Algorithms & Autonomy (2025: 5 → 2035: 8)

This layer is the most software-centric. It has attracted a lot of attention and funding recently and spans the key functions of perception, localization, decision / planning and learning / adaptation. What follows is an attempt to break down a vast category with overlapping problem spaces.

Perception: Interpreting raw sensor data to understand the environment. Perception tells the machine about the world as it currently is given its sensor experience. For example, computer vision models detect and classify objects from camera images (using CNNs or now even vision transformers), while SLAM (Simultaneous Localization and Mapping) algorithms build 3D maps from lidar or camera data. Modern robots often have a perception stack that fuses multiple sensors to output a coherent representation of the world.

Localization: Determining the robot’s own position and orientation in the world. This can involve sensor fusion of IMU (inertial measurement unit), GPS and visual cues. Accurate localization underpins autonomy – whether it’s a vacuum cleaner knowing its position in a home, or an autonomous car pinpointing itself on a map.

Decision and Planning: Given a goal (like “move from A to B” or “pick up that object”), the AI must decide on a sequence of actions. This involves motion planning algorithms, e.g. to find a route for a self driving car as well as higher-level task planning, e.g. deciding in what order to pick items in an order fulfillment task. Low-level control algorithms ensure the planned actions are executed by the actuators.

Learning and Adaptation: What sets “AI” apart is the ability to learn from data and improve. Many Physical AI systems use machine learning models – from vision to control – that are trained on data in simulations.
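To make the localization item above more concrete, here is a minimal sketch of sensor fusion in one dimension: a Kalman-style filter that predicts position from a velocity estimate (as might come from an IMU or wheel odometry) and corrects it with a noisy GPS fix. The class and function names and all noise values are illustrative assumptions, not taken from any particular robotics stack.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    position: float  # estimated position along one axis, in metres
    variance: float  # uncertainty of that estimate, in m^2


def predict(est: Estimate, velocity: float, dt: float, process_var: float) -> Estimate:
    """Dead-reckoning step: move by velocity * dt and grow the uncertainty."""
    return Estimate(est.position + velocity * dt, est.variance + process_var)


def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Correction step: blend in a position measurement, weighted by uncertainty."""
    gain = est.variance / (est.variance + meas_var)  # Kalman gain in [0, 1]
    position = est.position + gain * (measurement - est.position)
    return Estimate(position, (1.0 - gain) * est.variance)


# Hypothetical numbers: start at 0 m, drive at 1 m/s for 1 s, then read GPS.
belief = Estimate(position=0.0, variance=1.0)
belief = predict(belief, velocity=1.0, dt=1.0, process_var=0.5)
belief = update(belief, measurement=1.2, meas_var=1.5)
print(belief)
```

With these numbers the prediction and the GPS fix are equally uncertain, so the fused position lands halfway between them and the variance is halved. Real systems extend the same idea to full 3D pose and many more sensors.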

World models are an attempt to leverage neural nets to model the physics of the world by simulating environments. They enable accurate predictions about how the world will change given a choice of actions. This is critical for proper learning and reveals a technology gap because most dominant approaches are policy or imitation based, neither of which does anything that resembles planning. NVIDIA’s Cosmos and Google’s Genie teams (see a great overview here) have been working on this problem for years.

Another frontier is embodied foundation models focused on general purpose AI for multi-task robotics (e.g. Google’s RT-2, Physical Intelligence’s π0). They output task-specific actions (like grasping objects) and serve as base models for downstream applications.

In their Welcome to the Era of Experience paper, David Silver and Richard S. Sutton explain how agents will acquire superhuman abilities by learning predominantly from experience (think reinforcement learning) as opposed to simulations or imitation learning, which are limited by data bottlenecks and don’t really make machines intelligent:

“The era of human data offered an appealing solution. Massive corpuses of human data contain examples of natural language for a huge diversity of tasks. Agents trained on this data achieved a wide range of competencies compared to the more narrow successes of the era of simulation. (...) However, something was lost in this transition: an agent’s ability to self-discover its own knowledge. In the era of experience, agents can inhabit streams of experience rather than short snippets of interaction. Their actions, observations and rewards will be grounded in the environment rather than human dialogue.”

Welcome to the era of experience, Fig 1 (highlighted by the author)

Following this line of thinking we expect to see entirely new, experience based models rise that depend less on static simulation or human interaction. One of them is called decentralized sensory learning and is inspired by living nervous systems. Every signal a “sensor cell” receives (think a change in temperature) is a problem to be solved. When the machine acts in a way that makes those signals smaller by moving towards lower temperature for example, it learns. This approach is discussed in A Foundational Theory for Decentralized Sensory Learning and implemented by Intuicell. Similarly, Noumenal is combining an “experienced physics” approach with a library of behaviours robots can pick from (on edge or loaded from the cloud) to dynamically interact with their environment.

Despite all this progress, generalization across form factors and tasks remains very challenging. Unpredictable environments (mind the slippery floor!) are an unsolved problem. Cobot’s Brad Porter wrote a great in-depth piece for more context: The Business of Robotics Foundation Models

DevOps & SimOps (2025: 4 → 2035: 8)

Robotics is still in its “DevOps infancy.” Simulation engines like Isaac Gym, MuJoCo, Brax, and Unity Robotics are powerful, but workflow tooling is fragmented. No GitHub/Hugging Face-style hubs for simulation versioning, scenario benchmarking, or real-world model validation exist yet. A support layer for cloud management and continuous integration, analogous to MLOps, is lacking. Until recently the sim-to-real gap seemed to be a critical bottleneck, as closing it has been more art than science: AI models are first tested in simulation, then deployed on physical robots; real-world data and outcomes are collected to refine the models and the simulator to improve the next cycle’s performance (sim → train → deploy → learn → improve sim). This approach could turn into a self-reinforcing flywheel if managed well (think “physics-as-a-software” feedback loop). The sim-to-real problem might be solved by novel approaches to learning and adaptation as discussed above. However, sensory accuracy and messy environments (dust, fog, noise) might still be challenging to overcome.
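The sim → train → deploy → learn → improve sim loop can be illustrated with a deliberately tiny toy, under strong assumptions: a robot pushes a block that should slide exactly one metre, the simulator starts with a wrong friction coefficient, and each real-world rollout is used to recalibrate it. All function names, the physics model and the numbers are hypothetical; real pipelines calibrate far richer simulators from noisy data.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def train_policy_in_sim(mu_sim: float, target_m: float) -> float:
    """'Train' in sim: pick the push speed that reaches the target under sim friction."""
    return math.sqrt(2.0 * mu_sim * G * target_m)


def deploy_on_robot(push_speed: float, mu_real: float) -> float:
    """Real-world rollout: sliding distance under the true (unknown) friction."""
    return push_speed ** 2 / (2.0 * mu_real * G)


def estimate_friction(push_speed: float, observed_m: float) -> float:
    """Learn from the rollout: infer friction from the observed sliding distance."""
    return push_speed ** 2 / (2.0 * G * observed_m)


def run_flywheel(mu_sim: float, mu_real: float, target_m: float,
                 cycles: int, blend: float = 0.5) -> list[tuple[float, float]]:
    """Run the loop and return (simulator friction, real-world miss in m) per cycle."""
    history = []
    for _ in range(cycles):
        speed = train_policy_in_sim(mu_sim, target_m)      # sim -> train
        observed = deploy_on_robot(speed, mu_real)         # deploy
        mu_observed = estimate_friction(speed, observed)   # learn
        mu_sim += blend * (mu_observed - mu_sim)           # improve sim
        history.append((mu_sim, abs(observed - target_m)))
    return history


for cycle, (mu, miss) in enumerate(run_flywheel(0.2, 0.5, 1.0, cycles=5), start=1):
    print(f"cycle {cycle}: sim friction={mu:.3f}, real-world miss={miss:.3f} m")
```

Each pass shrinks the gap between simulated and real friction, so the policy trained in simulation misses the target by less on the next deployment. That shrinking miss is the flywheel effect in miniature.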

Apps & Services (2025: 3 → 2035: 7)

There’s no “robot app store” or SDK ecosystem akin to iOS/Android available yet. Most robots are closed systems or require firmware-level dev work. Modular skill deployment (e.g., “fold laundry,” “pick lettuce”) is rare. Commercial APIs exist (e.g., for drone fleet ops), but only in tightly verticalized domains.

The TLDR is that the physical AI stack is still in its infancy. Basic hardware components like sensors and edge compute infrastructure have made huge leaps over the last few years, with AI algorithms and autonomy catching up quickly. Higher levels of abstraction like DevOps / SimOps or Apps & Services are underdeveloped but expected to take off as the lower levels mature.

Opportunities

Over the last few years physical AI has seen a narrative shift based on the above inflections, media presence and significant funding rounds, especially in the autonomy software category.

Yet, there is a delta between insider and outsider perception creating an arbitrage opportunity for venture investors and entrepreneurs alike - at least for those who know what they are looking for. Jordan Nel put it well in Robotics: a product selection problem:

It seems the consensus outside-robotics take is “capex heavy, hard to scale, small TAM”, the consensus inside take is “scaling laws hold, full autonomy, humanoids, El Segundo, lab-spinouts, 1:1 domain-transfer from LLM learnings”.

At Inflection we like to take highly convex positions where we assign a higher probability of success to an opportunity than the market consensus while optimising for fat-tail outcomes. We also take our first-check mandate into account, which keeps us disciplined and focused on specific opportunities.

Robotic AGI

As discussed above (AI algorithms & autonomy), this is the frontier in physical AI these days. It is dominated by rock star robotics entrepreneurs straight out of the leading labs with backing from large, multi-stage funds. It is therefore a hyper-competitive opportunity set in which very few micro funds like us should compete.

Despite strong market signals, we currently don’t think that this category will be particularly lucrative. Drawing analogies to the model wars in LLM foundation models, we expect to see a very fragmented landscape of hierarchically organised models powering the robotic brains of the future. Depending on the task at hand, a combination of different models will be used to optimise for various trade-offs. Further, we struggle to imagine how those businesses can create real moats, echoing the leaked Google memo “We Have No Moat, And Neither Does OpenAI”. Defensibility could be increased through (1) control of distribution (e.g. marketplaces for collections of behaviours) or (2) vertical integration of robotic AGI (which NVIDIA seems to be going after).

We’d be very keen to explore novel approaches bringing the experiential era to life.

Vertically integrated services

Based on the constraints discussed above we believe that vertically integrated specialist companies have more appeal in the near term. Here are some of the high level patterns we are looking for:

Hair on fire problem in a blue ocean / fragmented market: The problem set at hand should be urgent and existential for customers with no or only insufficient alternative solutions available. Critical industries that are typically fragmented and didn’t benefit much from automation over the last decades might hold more attractive opportunities for start-ups. Reducing human exposure to hazardous or dangerous locations increases urgency in general. Some examples:

Military: ARK* for fleet control in autonomous drone warfare; NAD* for autonomous counter UAV; Laelaps for autonomous physical security; Radical* for cell towers and eyes in the stratosphere.

Construction: Built Robotics for solar construction; Cosmic for critical infra maintenance; Shantui bulldozers; MAX for rebar tying; Raise Robotics and Monumental for on-site construction.

Inspection, Maintenance: Koks for silo cleaning; Nautica for under water inspection.

Space: Lodestar* for autonomous object manipulation to secure the space domain, Motive Space Systems for in space servicing, assembly and manufacturing.

Science: Trilobio for whole-lab automation in syn bio; LabLynx and Sapio for Laboratory Information Management Systems.

Capability centric: most customers aren’t interested in buying robots (those who do are large industrials) but full blown capabilities. They want to buy a service that can be easily integrated into their operations. E.g. the Military has no interest in buying hardware components from X and software from Y to put them together. Instead they need fully fledged, working solutions.

Lower cost: common pitfalls for robotics companies have been high R&D and up front CAPEX spending. As described in the Inflections section, those cycles started compressing significantly. Off the shelf hardware components, additive manufacturing techniques and intelligent simulation and CAD software drive down costs and increase velocity. Simple hardware form factors play a large role because hardware is expensive and complex hardware is very expensive. Humanoids (Figure, Tesla) don’t make sense for most use cases (high center of mass, wheels are simpler and cheaper than legs). From a customer’s perspective the service should be significantly cheaper than the next best alternative.

Defensibility: economies of scale can create strong moats but require deep integration and thereby time. Software- and/or data-enabled network effects should be actively pursued, as hardware alone will commoditise quickly. Robotics data is still a critical bottleneck to be overcome, e.g. environmental (presence sensing for collaborative robots, air quality, temperature, 3D spatial maps to avoid collisions or other accidents) or robot internal (joint angles, velocity, pressure of grippers, maintenance logs, balance etc.).

Micro Factories

Microfactories are highly automated, small-to-medium-scale manufacturing facilities designed to produce low volumes of products with high flexibility and efficiency. Unlike traditional factories that rely on mass production and large-scale infrastructure, microfactories use advanced technologies—such as robotics, artificial intelligence (AI), and digital fabrication tools (e.g., 3D printing, CNC machines)—to enable agile, on-demand, and often localized manufacturing.

Micro factories can provide an alternative, horizontal infrastructure to manufacture machines of all kinds as they allow for adaptive process management and the fast redirection of materials and distribution if needed. Their modular and distributed design increases supply chain resilience and flexibility.

This category is still very early in its development. Companies like Bright Machines (end-to-end automation suite for manufacturing), Isembard (franchise network for machine shops) or Arrival (electric vehicles) are early pioneers. As indicated in the introduction, we might see an “industrial explosion” accelerated by embodied AI feedback loops.

“During World War II the United States and many other countries converted their civilian economies to total war economies. This meant converting factories that produced cars into factories that produced planes and tanks, redirecting raw materials from consumer products to military products, and rerouting transportation networks accordingly. (...) Roughly speaking, the plan is to convert existing factories to mass-produce a variety of robots (designed by superintelligences to be both better than existing robots and cheaper to produce) which then assist in the construction of newer, more efficient factories and laboratories, which produce larger quantities of more sophisticated robots, which produce even more advanced factories and laboratories, etc. until the combined robot economy spread across all the SEZs is as large as the human economy (and therefore needs to procure its own raw materials, energy, etc.)”

AI 2027 Report, Exhibit U - Robot Economy Doubling Times; (highlighted by the author)

Timelines remain very hard to predict but we expect fast adoption particularly in NATO defence applications where urgency is very high and local procurement laws often require “local manufacturing” of critical components. Think drone micro factories near front lines etc.

Picks and Shovels

The complementary approach to vertically integrated services would be horizontal services and components like sensors, chips, simulators, data platforms or “SimOps” infrastructure that those doing the deployment will need (e.g. Cogniteam for robotics cloud). Marketplaces for robotic data sets and behavioural libraries might fall into this category, as might specialised edge AI silicon (Ubitium*), resource orchestration or next generation sensing (Xavveo for synthetic aperture radar; Singular Photonics and Pixel Photonics for single photon detection; Qurv for wide spectrum image sensing).

As the ecosystem matures, we expect some consolidation: shovel companies partnering with or being acquired by vertically integrated services companies who need their tech, and vice versa (a robot company open-sourcing some tools once they build their own, commoditizing that layer).

*) Inflection portfolio company

If you are working on related companies solving any of the hard problems mapped out in this piece, we’d love to hear from you!

A big *thank you* to Prof. Jeff Beck from Noumenal, Felix Neubeck from Playfair, Viktor Luthman from Intuicell and Sophia Belser from Laelaps for critical feedback and ideas. My gratitude also goes to the many dozens of founders and researchers who spent time with the Inflection team discussing the above themes over the last few years.